No-Code Open Platform Database Creation: Empowering Businesses to Build Faster

A Comprehensive Guide to Implementing Scalable Databases Without Coding Expertise

In the modern landscape of data management, the ability to implement scalable databases without coding expertise is becoming increasingly important for organizations of all sizes. This guide aims to illuminate the process, focusing on user-friendly tools and intuitive interfaces that demystify database setup. By examining key features, effective implementation strategies, and best practices for ongoing management, we will show how even non-technical users can confidently navigate this complex terrain. What are the key factors that enable these users to make effective use of scalable databases? The answers may redefine your approach to data management.

Understanding Scalable Databases

In modern data management, scalable databases have become a critical solution for organizations that need to handle growing volumes of data efficiently. These databases are designed to accommodate growth by allowing resources to be added seamlessly, either through horizontal scaling (adding more machines) or vertical scaling (upgrading existing machines). This flexibility is vital in today's fast-paced digital landscape, where data is generated at an unprecedented rate.

Scalable databases commonly use distributed architectures, which allow data to be spread across multiple nodes. This distribution not only improves performance but also provides redundancy, keeping data available even when hardware fails. Scalability is an important factor for many applications, including e-commerce platforms, social media networks, and big data analytics, where user demand can fluctuate dramatically.
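For readers curious about what a no-code tool handles behind the scenes, the Python sketch below shows one simple way a distributed database could place each record on several nodes so that a single hardware failure does not make data unavailable. It assumes hash-based placement with extra copies on the next nodes in the list; the node names, the `place` and `readable_after_failure` helpers, and the replica count are hypothetical, and production systems typically use more sophisticated schemes such as consistent hashing.

```python
import hashlib


def place(key: str, nodes: list, replicas: int = 2) -> list:
    """Return the nodes holding copies of `key`, primary first (hypothetical scheme)."""
    # Hash the key to choose a starting node, then store extra copies on the following nodes.
    digest = int(hashlib.sha256(key.encode()).hexdigest(), 16)
    start = digest % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(replicas)]


def readable_after_failure(keys, nodes, failed, replicas=2):
    """True if every key still has at least one copy on a node other than `failed`."""
    return all(any(node != failed for node in place(k, nodes, replicas)) for k in keys)


nodes = ["node-a", "node-b", "node-c"]
keys = [f"customer:{i}" for i in range(1000)]
print(place("customer:42", nodes))
print(readable_after_failure(keys, nodes, failed="node-b"))  # True
```

With two copies on distinct nodes, losing any one node still leaves a readable copy of every record; with a single copy, records stored only on the failed node would be lost until it recovers.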

In addition, scalable databases often offer configurable data consistency models that balance performance and reliability. Organizations should weigh their specific requirements, such as read and write speeds, data integrity, and fault tolerance, when choosing a scalable database solution. Ultimately, understanding the underlying principles of scalable databases is essential for businesses that want to thrive in an increasingly data-driven world.
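One common way distributed databases trade consistency against speed is quorum replication, used in Dynamo-style stores such as Apache Cassandra: with N copies of the data, a write must be acknowledged by W replicas and a read consults R replicas. If R + W > N, every possible read set overlaps every possible write set, so a read always reaches at least one replica that saw the latest write. The Python sketch below checks that rule by brute force; it illustrates one technique only, and the `quorums_overlap` helper is hypothetical rather than part of any database's API.

```python
from itertools import combinations


def quorums_overlap(n, w, r):
    """True if every write set of size w shares at least one replica with every read set of size r."""
    replicas = range(n)
    return all(
        set(write_set) & set(read_set)  # non-empty intersection means the sets overlap
        for write_set in combinations(replicas, w)
        for read_set in combinations(replicas, r)
    )


for n, w, r in [(3, 2, 2), (3, 1, 1), (5, 3, 3)]:
    print(f"N={n} W={w} R={r}: overlap={quorums_overlap(n, w, r)}, R+W>N={r + w > n}")
```

Choosing W = R = 1 makes each operation faster because it waits for only one replica, at the cost of reads that may miss the most recent write; larger quorums give stronger guarantees but more latency.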

Key Features to Look For

When evaluating scalable databases, several key features are essential to ensuring optimal performance and reliability. First, consider the database's architecture. A distributed architecture improves scalability by allowing data to be stored across multiple nodes, supporting seamless data access and processing as demand grows.

Another essential feature is data partitioning, which makes large datasets easier to manage by dividing them into smaller, more manageable pieces. This approach not only improves performance but also simplifies resource allocation.
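As an illustration of partitioning, the Python sketch below groups hypothetical order records into monthly range partitions. The `order_date` field, the month granularity, and the `partition_by_month` helper are assumptions made for this example; databases that support partitioning do this internally and also offer other schemes, such as hash or list partitioning.

```python
from collections import defaultdict
from datetime import date


def partition_by_month(rows):
    """Group rows into partitions keyed by the 'YYYY-MM' of their order_date."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["order_date"].strftime("%Y-%m")].append(row)
    return dict(partitions)


orders = [
    {"id": 1, "order_date": date(2024, 1, 5)},
    {"id": 2, "order_date": date(2024, 1, 20)},
    {"id": 3, "order_date": date(2024, 2, 2)},
]
parts = partition_by_month(orders)
print({month: len(rows) for month, rows in parts.items()})  # {'2024-01': 2, '2024-02': 1}

# A query about February only needs to read one small partition instead of the whole table.
february = parts.get("2024-02", [])
```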

In addition, look for robust replication capabilities. Replication keeps redundant copies of the data and provides high availability, minimizing downtime during maintenance or unexpected failures.

Performance monitoring tools are also important, as they provide real-time insight into system health and operational efficiency, allowing prompt adjustments to maintain optimal performance.

User-Friendly Database Tools

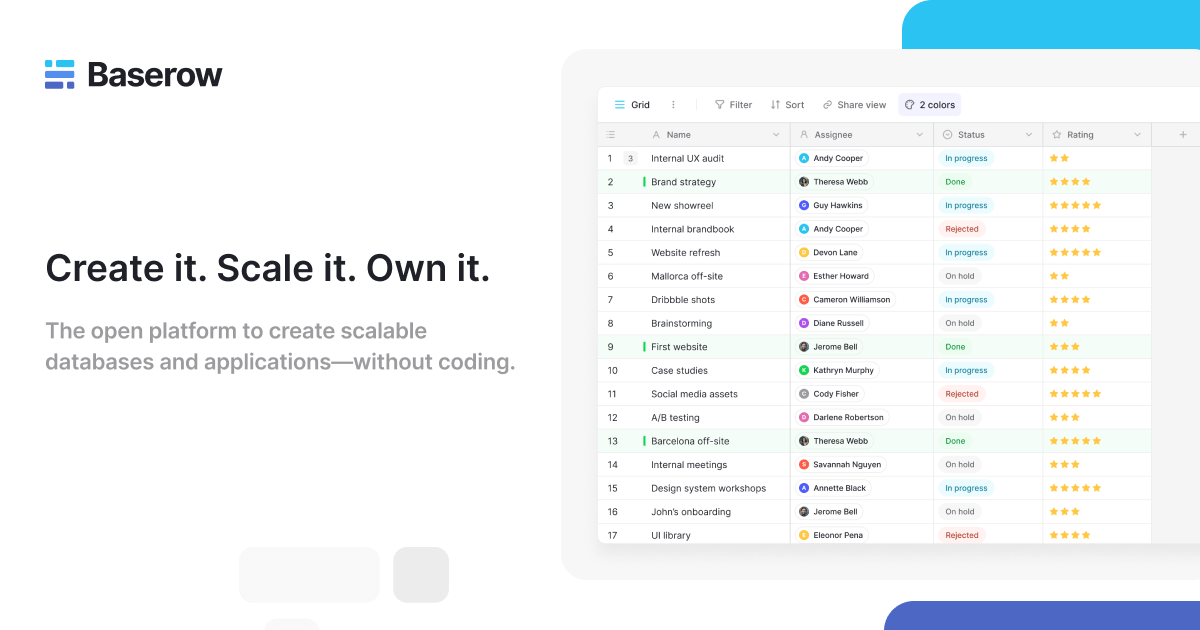

Simplicity is a key element in the design of user-friendly database tools, as it makes them accessible to people with varying levels of technical expertise. These tools prioritize intuitive interfaces that let users create, manage, and query databases without extensive programming knowledge.

Key features typically include drag-and-drop functionality, visual data modeling, and pre-built templates that simplify setup. Such tools often provide guided tutorials or onboarding flows that encourage user engagement and shorten the learning curve. In addition, seamless integration with popular data sources and services lets users import and export data easily, further streamlining operations.
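To show roughly what a visual data model or pre-built template can translate into, the Python sketch below turns a hypothetical template (a dictionary of tables and column definitions) into SQL `CREATE TABLE` statements and applies them to an in-memory SQLite database. The template format and the `to_ddl` helper are assumptions for illustration; each no-code tool uses its own internal representation.

```python
import sqlite3

# Hypothetical template, similar in spirit to what a visual modeler might export.
TEMPLATE = {
    "customers": {"id": "INTEGER PRIMARY KEY", "name": "TEXT NOT NULL", "email": "TEXT"},
    "orders": {
        "id": "INTEGER PRIMARY KEY",
        "customer_id": "INTEGER REFERENCES customers(id)",
        "total": "REAL",
    },
}


def to_ddl(template):
    """Build one CREATE TABLE statement per table (names must come from a trusted template)."""
    statements = []
    for table, columns in template.items():
        column_sql = ", ".join(f"{name} {spec}" for name, spec in columns.items())
        statements.append(f"CREATE TABLE {table} ({column_sql})")
    return statements


conn = sqlite3.connect(":memory:")
for statement in to_ddl(TEMPLATE):
    print(statement)
    conn.execute(statement)
```

Because table and column names are pasted directly into SQL, this approach is only safe for definitions the tool itself controls, not for arbitrary user input.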

Furthermore, strong support and community resources, such as forums and documentation, improve the user experience by providing help when it is needed. Overall, user-friendly database tools empower organizations to harness the power of scalable databases, making data management accessible to everyone involved.

Step-by-Step Implementation Guide

How can organizations effectively implement scalable databases to meet their growing data needs? The process begins with identifying specific data requirements, including the volume, variety, and velocity of the data to be processed. Next, organizations should evaluate user-friendly database tools that offer scalability features, such as cloud-based solutions or managed database services.
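Estimating volume and velocity can start with simple arithmetic. The Python sketch below computes rough storage needs and the average write rate for a hypothetical workload; the figures (2 million rows per day, about 500 bytes per row, one year of retention, three copies) are illustrative assumptions, not recommendations, and real estimates should also account for indexes, overhead, and peak rather than average load.

```python
def estimate_storage_gb(rows_per_day, bytes_per_row, retention_days, replication_factor):
    """Rough storage estimate in gigabytes (1 GB = 10**9 bytes), ignoring indexes and overhead."""
    raw_bytes = rows_per_day * bytes_per_row * retention_days
    return raw_bytes * replication_factor / 1e9


def average_writes_per_second(rows_per_day):
    """Average write rate; real traffic peaks can be many times higher."""
    return rows_per_day / 86_400  # seconds in a day


# Hypothetical workload: 2 million rows/day, ~500 bytes each, kept 365 days, 3 copies.
print(estimate_storage_gb(2_000_000, 500, 365, 3))     # 1095.0
print(round(average_writes_per_second(2_000_000), 1))  # 23.1
```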

Once the right tool is chosen, the next step is configuring the database environment. This includes setting up instances, defining user permissions, and designing data structures that align with business objectives. Organizations should then migrate existing data into the new system, preserving data integrity and keeping disruption to operations to a minimum.
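One way to confirm data integrity after a migration is to compare a fingerprint of each table in the old and new systems. The Python sketch below uses two in-memory SQLite databases as stand-ins for the old and new systems and compares row counts plus a SHA-256 digest of all rows in primary-key order. The `customers` table and the `table_fingerprint` helper are hypothetical; comparing across different database products would also require normalizing value types before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, sha256_hex) over all rows ordered by `key` (trusted names only)."""
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode())
        count += 1
    return count, digest.hexdigest()


old = sqlite3.connect(":memory:")
new = sqlite3.connect(":memory:")
for db in (old, new):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
old.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])

# Migrate: copy every row from the old system into the new one.
rows = old.execute("SELECT id, name FROM customers").fetchall()
new.executemany("INSERT INTO customers VALUES (?, ?)", rows)
new.commit()

print(table_fingerprint(old, "customers", "id") == table_fingerprint(new, "customers", "id"))  # True
```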

After migration, thorough testing is essential; this includes performance testing under various load conditions to make sure the system can handle future growth. It is also important to train staff on the database management interface so they can use it smoothly.
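The idea behind a basic load test can be sketched by timing the same query as the dataset grows. The Python example below uses the built-in `sqlite3` module purely for illustration; the `events` table, the dataset sizes, and the `time_query` and `load_test` helpers are hypothetical. A realistic test would also vary the number of concurrent users and run against the actual target system rather than an in-memory database.

```python
import sqlite3
import time


def time_query(conn, sql, params=(), repeats=20):
    """Average wall-clock seconds per execution of `sql`."""
    start = time.perf_counter()
    for _ in range(repeats):
        conn.execute(sql, params).fetchall()
    return (time.perf_counter() - start) / repeats


def load_test(sizes):
    """Build a table of each size and time a filtered count query against it."""
    results = {}
    for n in sizes:
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, kind TEXT)")
        conn.executemany(
            "INSERT INTO events (kind) VALUES (?)",
            ((f"kind-{i % 10}",) for i in range(n)),
        )
        results[n] = time_query(conn, "SELECT COUNT(*) FROM events WHERE kind = ?", ("kind-3",))
        conn.close()
    return results


for size, seconds in load_test([1_000, 10_000, 100_000]).items():
    print(f"{size:>7} rows: {seconds * 1000:.2f} ms per query")
```

If query time grows roughly in proportion to table size, that is a hint the query is scanning the whole table and may benefit from an index.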

Best Practices for Management

Effective management of scalable databases requires a deliberate strategy centered on ongoing monitoring and optimization. To achieve this, organizations should deploy robust monitoring tools that provide real-time insight into database performance metrics such as query response times, resource utilization, and transaction throughput. Reviewing these metrics regularly helps identify bottlenecks and areas for improvement.
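Percentile latencies are a common way to spot bottlenecks, because averages can hide a slow tail of requests. The Python sketch below computes the nearest-rank 95th percentile response time per query from hypothetical monitoring samples and flags queries above a threshold. The sample data, the 200 ms threshold, and the helper names are assumptions for illustration; monitoring tools usually compute such figures for you.

```python
import math
from collections import defaultdict


def percentile(samples, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def slow_queries(log, threshold_ms, pct=95):
    """Return {query_name: percentile latency} for queries whose percentile exceeds threshold_ms."""
    by_query = defaultdict(list)
    for name, latency_ms in log:
        by_query[name].append(latency_ms)
    stats = {name: percentile(values, pct) for name, values in by_query.items()}
    return {name: value for name, value in stats.items() if value > threshold_ms}


# Hypothetical samples of (query name, response time in milliseconds).
log = [
    ("get_customer", 4), ("get_customer", 6), ("get_customer", 5),
    ("monthly_report", 180), ("monthly_report", 950), ("monthly_report", 210),
]
print(slow_queries(log, threshold_ms=200))  # {'monthly_report': 950}
```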

Regular backups and disaster recovery plans are essential to protect data integrity and availability. Establishing a schedule for testing these backups ensures a reliable recovery process in case of an unexpected failure.
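Testing a backup means restoring it and checking the result, not just confirming that a file was written. The Python sketch below uses SQLite's online backup API (`sqlite3.Connection.backup`, available since Python 3.7), then reopens the copy and runs `PRAGMA integrity_check` and a row count. The `orders` table and the `backup_and_verify` helper are hypothetical; managed database services provide their own backup and restore mechanisms.

```python
import os
import sqlite3
import tempfile


def backup_and_verify(source, backup_path, table):
    """Copy `source` to `backup_path`, reopen the copy, and return (integrity, row_count)."""
    dest = sqlite3.connect(backup_path)
    source.backup(dest)  # SQLite online backup API
    dest.close()

    restored = sqlite3.connect(backup_path)
    try:
        integrity = restored.execute("PRAGMA integrity_check").fetchone()[0]
        row_count = restored.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    finally:
        restored.close()
    return integrity, row_count


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
source.executemany("INSERT INTO orders (total) VALUES (?)", [(9.5,), (20.0,), (4.25,)])
source.commit()

with tempfile.TemporaryDirectory() as tmp:
    print(backup_and_verify(source, os.path.join(tmp, "orders-backup.db"), "orders"))  # ('ok', 3)
```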

Performance tuning should also be a continuous process. Adjusting indexing strategies, optimizing queries, and scaling resources (whether vertically or horizontally) will help maintain optimal performance as usage demands evolve.
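The effect of an indexing change can be checked by comparing query plans before and after. The Python sketch below uses SQLite's `EXPLAIN QUERY PLAN` on a hypothetical `orders` table: without an index, the plan typically reports a full table scan (`SCAN`), and after creating an index on `customer_id` it typically reports a search using that index. The exact wording of the plan text varies between SQLite versions, and other databases expose plans through their own `EXPLAIN` commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"


def plan(conn, sql, params):
    """Join the human-readable 'detail' column (last field) of each plan row."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))


before = plan(conn, QUERY, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(conn, QUERY, (42,))
print("before:", before)
print("after: ", after)
```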

Finally, fostering a culture of knowledge sharing among team members enables continuous learning and adaptation, ensuring that scalable databases remain efficiently and effectively managed over time.

Conclusion

In conclusion, scalable databases can be implemented successfully without coding expertise by using intuitive interfaces and user-friendly tools. By following the strategies outlined for configuration, data migration, and performance testing, users can navigate the complexities of database management with confidence. Emphasizing best practices for ongoing maintenance and collaboration further strengthens the ability to manage scalable databases effectively in a rapidly evolving, data-driven environment.